Image credits https://eehotwater.com.au/

Detecting leaks has never been easier. The new LeakLooker app gives you a clean interface to discover, browse and monitor different kinds of leaks from many sources. It uses Binary Edge as its discovery source, and Python, Django, Ajax, jQuery and CSS for the web application. I will show how to use it, how to get the best results out of it, and examples of what is publicly accessible.

Introduction

Almost every week, new security incidents come to light that violate the privacy of millions of people. Banks, big corporations, armies and many other industries have suffered breaches or lost their records or intellectual property because of misconfigured services like Elastic, MongoDB, Gitlab or Rsync. Many security firms and independent researchers have dedicated their work to finding unprotected databases and following responsible disclosure, so that bad actors cannot abuse the exposed data.

The idea is the same for white and black hats alike: detect critical information leaks (PII, credit cards, IP etc.), analyze the data and, if you want to do the right thing, inform the owner. The methods can’t differ much either. You can use Binary Edge and other search engines for Internet-connected devices, or you can actively scan the Internet for specific ports and services. What differs is resources and capabilities. A single researcher has limited time and resources to find valuable data, while companies with constant monitoring can more easily pick out interesting findings from the crowd. The idea behind the old LeakLooker script was to find as many potentially leaking services as possible and to help people who want to check what data could be exposed.

There are thousands of databases and services connected to the Internet that shouldn’t be exposed at all, and new ones appear every day. So how do you manage this amount of data, pick out the potentially valuable leaks and monitor for fresh data?

A different kind of search engine

The LeakLooker script has reached 1k stars on GitHub, so to mark the occasion it has been completely rewritten as a web application.

The dashboard shows various information about the possible leaks you have gathered: the number of each type, the percentage of databases already checked (marked as ‘confirmed’ or ‘for later review’), random leaks, leaks still to check and active notifications.
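The progress figure on the dashboard boils down to a simple ratio. Below is a minimal sketch in Python. It assumes findings are available as (type, status) pairs. The status labels and the `dashboard_stats` function are hypothetical and are not taken from LeakLooker’s code.

```python
from collections import Counter

# Hypothetical status labels; LeakLooker's real field values may differ.
CONFIRMED = "confirmed"
LATER_REVIEW = "for later review"
UNCHECKED = "unchecked"


def dashboard_stats(findings):
    """Summarise findings given as (leak_type, status) pairs.

    Returns per-type counts and the percentage of findings already
    checked, i.e. marked as confirmed or for later review.
    """
    per_type = Counter(leak_type for leak_type, _ in findings)
    checked = sum(1 for _, status in findings if status in (CONFIRMED, LATER_REVIEW))
    progress = 100.0 * checked / len(findings) if findings else 0.0
    return dict(per_type), round(progress, 1)


findings = [
    ("elastic", CONFIRMED),
    ("elastic", UNCHECKED),
    ("mongo", LATER_REVIEW),
    ("gitlab", UNCHECKED),
]
print(dashboard_stats(findings))  # ({'elastic': 2, 'mongo': 1, 'gitlab': 1}, 50.0)
```

Both ‘confirmed’ and ‘for later review’ count as checked, which matches how the dashboard describes its progress percentage.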

From now on, every result is saved into the database, and an intuitive interface lets you browse, delete and confirm each finding. You can search by a single type or several types at once, by keyword, network, country or page. But let’s start from the beginning.

The whole process consists of three steps:

1. Discover

First, you have to populate the database by searching for available services. You can choose between 11 types, and more may come.

It also lets you quickly compare your database with the results available from Binary Edge. The orange “count” button shows the number of records in your database, and the blue button shows the number of all exposed services.

You can read more about the types and queries in my previous articles.

The screenshot below shows the results for the keyword “backup” for Elastic. There are 546 results, i.e. 27 pages to scrape to collect all Elastic databases with the word “backup” in a collection name, hostname etc. At this step you should not go directly to the databases yet. Just collect all of the results for your inputs. You can also scrape results without a keyword, page by page, and look for interesting collections later.

You can search several types at the same time, as the screenshot below shows for the keyword “internal”. It shows the count and the first page of results for each type. Once you know the count for each type, you can go to a specific type and download the rest of its results. For example, to get the rest of the Gitlab results, search that type for pages 2 and 3.
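Knowing the count for each type, you can work out the remaining pages with a ceiling division. Below is a minimal sketch. It assumes page 1 of every type has already been fetched by the multi-type search. `remaining_pages` is a hypothetical helper. The page size is left as a parameter because it depends on what Binary Edge returns, so the numbers in the example are only illustrative.

```python
def remaining_pages(total_results: int, page_size: int, fetched=(1,)):
    """Return the page numbers still to download for one service type.

    total_results: count reported for the type (e.g. by the multi-type search)
    page_size:     results per page; an assumption, set it to what the API returns
    fetched:       pages already downloaded (page 1 comes with the first search)
    """
    if page_size <= 0:
        raise ValueError("page_size must be positive")
    # Integer ceiling division: a partially filled last page still counts.
    last_page = (total_results + page_size - 1) // page_size
    return [page for page in range(1, last_page + 1) if page not in fetched]


# Illustrative numbers only: 45 results at 20 per page give 3 pages.
# Page 1 is already shown, so pages 2 and 3 remain.
print(remaining_pages(45, 20))  # [2, 3]
```

Integer arithmetic is used instead of floating-point division, so large counts cannot produce rounding surprises.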

Note that only unique IPs are kept in the database. If your search returns nothing new, you will be told that your database is up to date.
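Keeping only unique IPs amounts to using the IP as the key when storing results. Below is a minimal sketch. A plain dict stands in for the real database table, and `add_results` is a hypothetical helper. The IPs are reserved documentation addresses.

```python
def add_results(database: dict, results):
    """Insert search results keyed by IP, skipping IPs that are already stored.

    database: maps IP -> result record (stands in for the real DB table)
    results:  iterable of dicts with at least an "ip" key
    Returns the list of IPs that were newly added.
    """
    added = []
    for result in results:
        ip = result["ip"]
        if ip in database:
            continue  # duplicate IP: keep the existing record
        database[ip] = result
        added.append(ip)
    return added


db = {}
first = add_results(db, [{"ip": "192.0.2.10"}, {"ip": "192.0.2.10"}, {"ip": "198.51.100.7"}])
second = add_results(db, [{"ip": "192.0.2.10"}])
print(first)  # ['192.0.2.10', '198.51.100.7']
print(second or "Your database is up to date")
```

An empty list of added IPs is what triggers the “up to date” message in this sketch.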

2. Browse

Once the database has been populated, it’s time to review the results. You can check them all at once in the “database” tab, sort them or search for extra keywords. I recommend browsing by type, because the table is more readable and its column names are tailored to that type of service.

Each type has its own table: for Elastic, indices are extracted; for Mongo, database names; and for Cassandra, keyspaces. The first column shows the keyword related to the result, followed by the other details needed to keep track of the host.

What makes it even better is that you can confirm a finding for quick access at any time. You can also mark it as “for later review”, which means the host is observed in case new records are added.

The red button deletes the host and puts it on a blacklist so it won’t be listed anymore. This avoids double work: you check each finding once, and already reviewed hosts are excluded.
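The review workflow can be modelled as a small state machine plus a blacklist. This is a sketch under stated assumptions. The `Findings` class, the `Status` values and the in-memory storage are hypothetical, whereas LeakLooker itself keeps this information in its database.

```python
from enum import Enum


class Status(Enum):
    NEW = "new"
    CONFIRMED = "confirmed"
    LATER_REVIEW = "for later review"


class Findings:
    """Toy in-memory store of reviewed hosts (hypothetical, for illustration)."""

    def __init__(self):
        self.status = {}        # host -> Status
        self.blacklist = set()  # hosts deleted after review

    def add(self, host):
        # Blacklisted hosts are never listed again, so no work is repeated.
        if host not in self.blacklist and host not in self.status:
            self.status[host] = Status.NEW

    def confirm(self, host):
        self.status[host] = Status.CONFIRMED

    def review_later(self, host):
        self.status[host] = Status.LATER_REVIEW

    def delete(self, host):
        # The "red button": remove the host and remember it in the blacklist.
        self.status.pop(host, None)
        self.blacklist.add(host)

    def watched(self):
        # Hosts marked "for later review" are the ones observed for new records.
        return [h for h, s in self.status.items() if s is Status.LATER_REVIEW]


store = Findings()
for host in ("192.0.2.1", "192.0.2.2", "192.0.2.3"):
    store.add(host)
store.confirm("192.0.2.1")
store.review_later("192.0.2.2")
store.delete("192.0.2.3")
store.add("192.0.2.3")  # ignored: the host is blacklisted
print(store.watched())  # ['192.0.2.2']
```

Keeping the blacklist separate from the status map means a deleted host stays excluded even if a later search returns it again.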

3. Monitor

Some databases are exposed only for a short period of time, sometimes just a couple of days, so they are really hard to find unless you get lucky. That’s where LeakLooker monitoring can help. Once you have reviewed all the findings, you can set up monitoring for a specific keyword. Every 24 hours, new results are compared with the current records in the database, and an email with the fresh findings is sent. The fresh findings are also saved into the database.
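The daily monitoring step can be sketched in three parts. First, check whether 24 hours have passed. Second, keep only results whose IP is not stored yet. Third, compose the notification. This is a hypothetical sketch, not LeakLooker’s implementation. It builds the email with the standard library’s `EmailMessage` but deliberately does not send it, because the article only states that delivery works through Gmail.

```python
from datetime import datetime, timedelta
from email.message import EmailMessage

CHECK_INTERVAL = timedelta(hours=24)


def is_due(last_run: datetime, now: datetime) -> bool:
    """True when at least 24 hours have passed since the last check."""
    return now - last_run >= CHECK_INTERVAL


def fresh_findings(known_ips, latest_results):
    """Return results whose IP is not stored yet, in order and without repeats."""
    seen = set(known_ips)
    fresh = []
    for result in latest_results:
        if result["ip"] not in seen:
            seen.add(result["ip"])
            fresh.append(result)
    return fresh


def build_notification(keyword, fresh, sender, recipient):
    """Compose (but do not send) an email listing the fresh findings."""
    msg = EmailMessage()
    msg["Subject"] = f"LeakLooker: {len(fresh)} new result(s) for '{keyword}'"
    msg["From"] = sender
    msg["To"] = recipient
    lines = [f"{r['ip']} ({r['type']})" for r in fresh]
    msg.set_content("\n".join(lines) or "No new results.")
    return msg


known = {"192.0.2.10"}
latest = [
    {"ip": "192.0.2.10", "type": "elastic"},
    {"ip": "198.51.100.7", "type": "mongo"},
]
fresh = fresh_findings(known, latest)
msg = build_notification("payment", fresh, "me@example.com", "me@example.com")
print(msg["Subject"])  # LeakLooker: 1 new result(s) for 'payment'
```

In a real deployment the fresh results would also be written to the database, as the text notes, so the next run treats them as known.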

This feature works only with Gmail, and you must first log in to the account via a browser.

To get precise results, choose your keyword wisely and adapt it to the type and to what you are looking for. For example, the keyword “payment” may appear in Mongo as a database name, in Elastic as a collection name and in Gitlab as a description. For directories, you should search for sql or pem files.
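Adapting the keyword to the type means checking it against a different field for each service. Below is a minimal sketch. The `SEARCH_FIELD` mapping and its field names are assumptions loosely based on the examples above, not LeakLooker’s schema.

```python
# Hypothetical mapping from service type to the record field a keyword
# is most likely to show up in, following the examples in the text.
SEARCH_FIELD = {
    "mongo": "database_names",
    "elastic": "indices",
    "gitlab": "description",
    "cassandra": "keyspaces",
}


def matches(record: dict, keyword: str) -> bool:
    """Case-insensitive check of a keyword against the type-specific field."""
    field = SEARCH_FIELD.get(record["type"])
    if field is None:
        return False
    value = record.get(field, "")
    # Some fields are lists of names (indices, databases), others plain text.
    values = value if isinstance(value, list) else [value]
    return any(keyword.lower() in str(v).lower() for v in values)


def interesting_files(paths, extensions=(".sql", ".pem")):
    """For directory listings, filter by file type instead of keyword."""
    return [p for p in paths if p.lower().endswith(extensions)]


print(matches({"type": "mongo", "database_names": ["Payments", "logs"]}, "payment"))  # True
print(interesting_files(["backup/db.SQL", "notes.txt", "keys/server.pem"]))
```

Matching is case-insensitive because database and index names are often written in mixed case.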

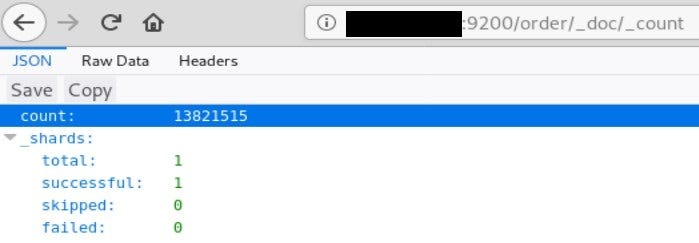

Case study: 13 million PII records with partially censored credit cards

I already showed in previous articles about LeakLooker what can be found, so it isn’t worth the effort to look for and report new ones here. During tests, however, I came across one interesting Elasticsearch database that, if misused, could lead to a serious data breach and put all of its users at risk.

The “Order” collection weighs 49 GB and is the most interesting one here. The prospect collection also contains information about potential clients and their personal data.

As the name suggests, it holds customer orders.

So, basically, a lot of information is exposed: email, credit card owner, expiry date, and card number with 6 digits censored.

As if that weren’t enough, in some cases the shipping address was also exposed, which makes it possible to track down each person who used the service.

To sum up, the information includes:

- First name

- Last name

- CC expiry date

- Partially censored CC number

- Shipping address

Some records also contained a shop name as the gateway, and these were clearly fraudulent diet/body mass shops: secondchanceketo[.]com, mecticmenshealth[.]com, secondchance-detox[.]com, sjrejuvenateskincream[.]com or donkketoslim[.]com. See the similarities? Investigating these fraudulent shops deserves a separate article.

There are many ways to abuse the collected data. In my opinion, the most successful would be to impersonate the company and send a phishing message to each email address in the database asking for a subscription renewal or similar, in order to obtain full credit card details.

Given the information listed above, it’s very plausible that a person would take the bait and enter their credit card information on a phishing website. Good phishing depends only on your imagination, but this exposed data makes the task trivial.

The certificate on port 80 points to the website adtracktiv.com. Not much is known about the company besides the fact that it runs a chargeback management program and is related to another one dubbed FraudWrangler.

The main goal of FraudWrangler is described as “Electronic monitoring of credit card activity to detect fraud via the internet; Software as a service (SAAS) services featuring software for identifying, managing, and rectifying fraudulent payment card transactions.”

https://tsdr.uspto.gov/#caseNumber=88093990&caseType=SERIAL_NO&searchType=statusSearch

Again, apart from this general description there is not much on the Internet about this company. There is certainly no security contact on their site, or any contact whatsoever. I approached Bob Diachenko, and he sent a message to an email address he found in the database. The database was then secured without a word of response, as far as I am aware.

I’m not surprised, given the sensitive nature of the leak and the fragile industry. One of the earliest entries I could find was from May 2017, practically the beginning of the company. While I was observing the database, new records arrived every day, so I assume it held real-time data.

Conclusion

For attackers, this technique opens many new doors and attack vectors. An attacker can use exposed data to attack customers directly, or escalate privileges using exposed API keys, private keys, tokens, credentials or config files.

For researchers, the new LeakLooker makes it possible to build a database of potential leaks and to manage the whole collection easily. It improves the process of finding and reporting exposed services. If you come across something critical, please report it and make the Internet a safer place, even if only a little.

Originally published on 21st of March, 2020
