Originally published on October 29th, 2017

TL;DR

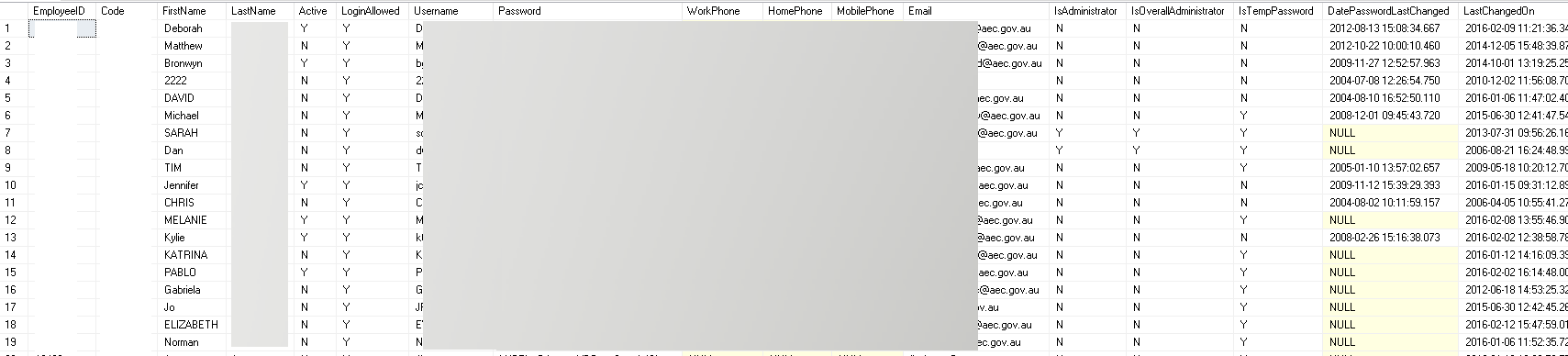

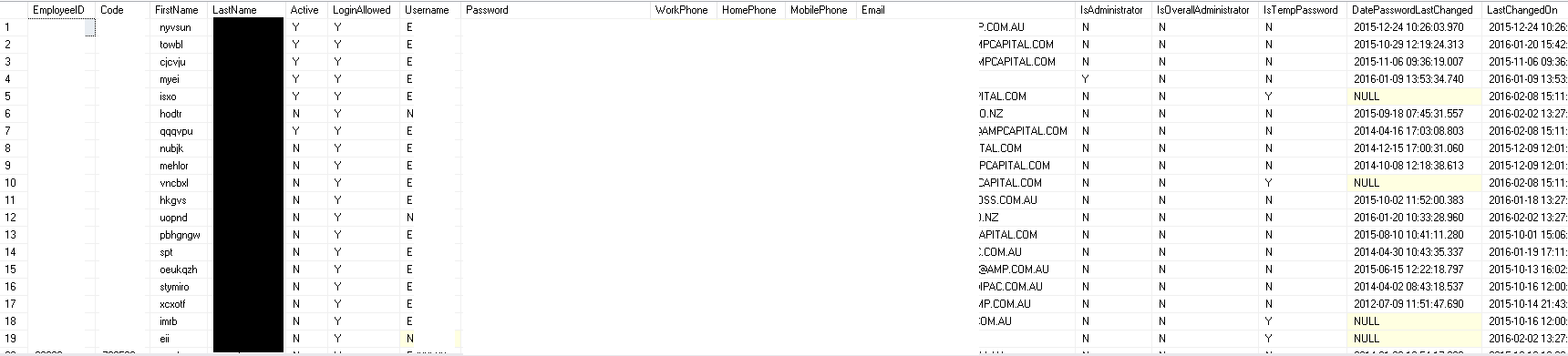

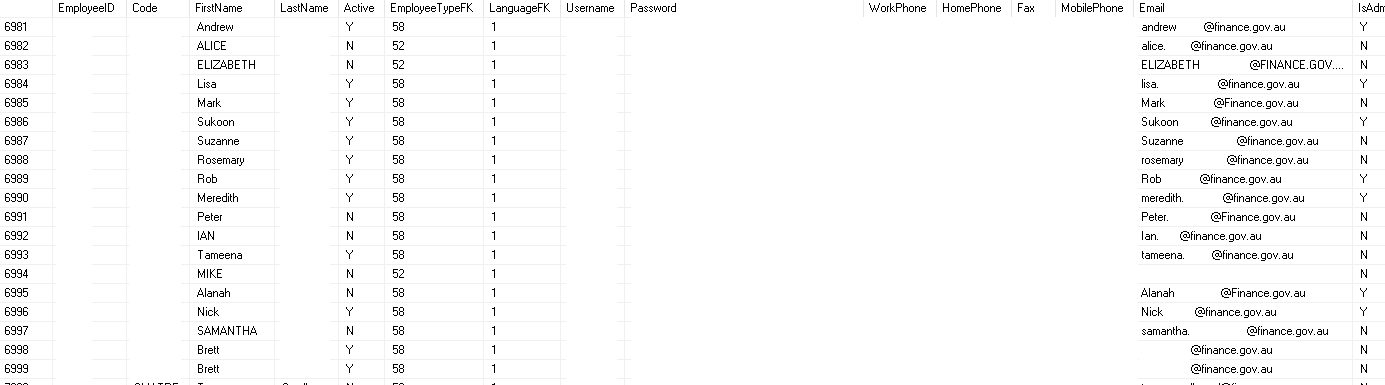

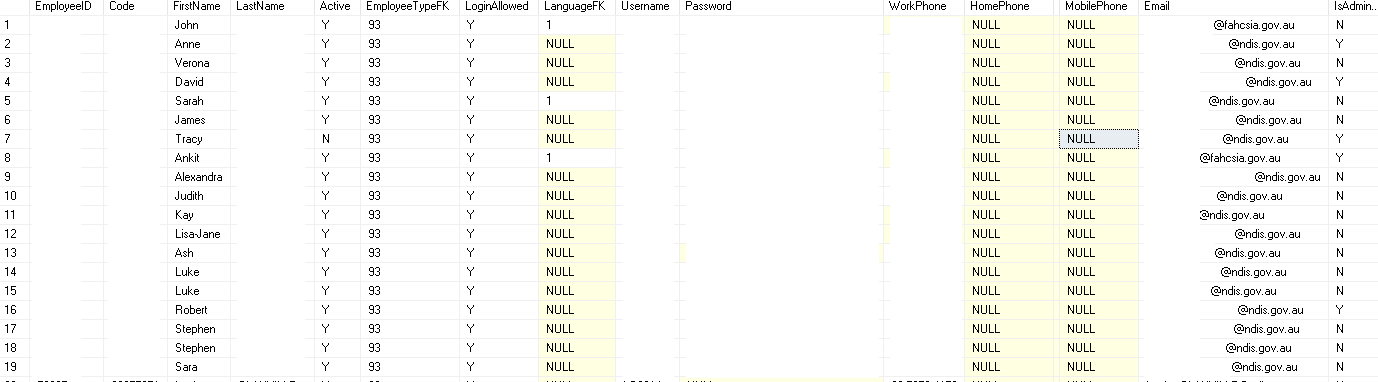

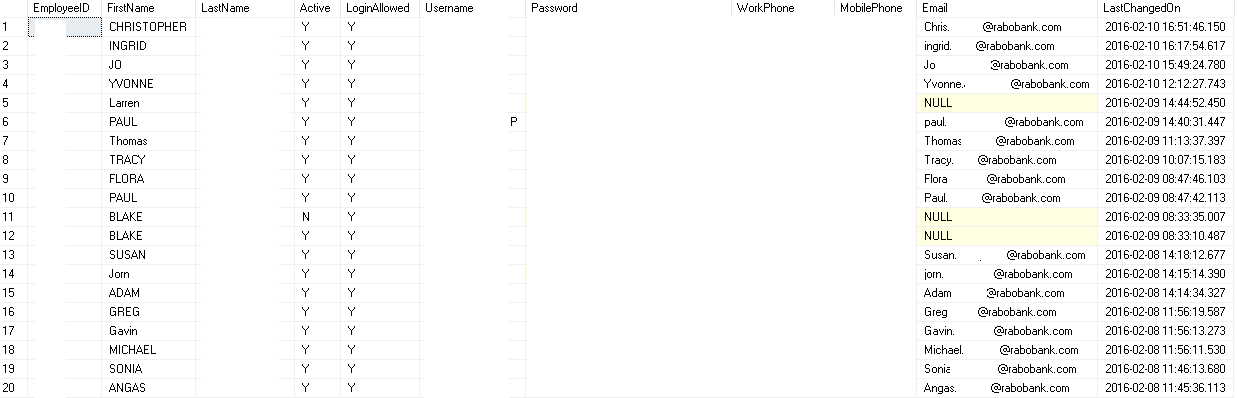

With the help of AWSBucketDump and my poor coding skills, I found database backups from 2016 on public Amazon storage containing 1,470 records from the AEC (Australian Electoral Commission), 3,000 from the Australian Department of Finance, 300 from the NDIS (National Disability Insurance Scheme Australia), 17,000 from UGL Limited, 25,000 from AMP Limited and 1,500 from Rabobank.

**Update**

This story was covered by the Australian media.

Introduction

When I previously read an article about publicly accessible Amazon S3 buckets, which allow anyone to access secret, confidential or internal data, I couldn’t believe it. To find out whether it was true, I prepared a dictionary, a brute-forcing tool and some post-exploitation scripts to test the theory.

Preparation

Keep in mind that each bucket is reachable as a subdomain of s3.amazonaws.com, so bucket names can be brute-forced the same way as subdomains. A small problem appears when access to a bucket is limited or denied: we need to throw away domains whose response carries “Access Denied” as the code. When a bucket returns a directory listing, we know that access is set to public (at least listing is allowed). That is the idea in two sentences; I strongly encourage you to get familiar with the possibilities of Jordanpotti’s tool AWSBucketDump. With a good dictionary it can find many interesting things. As for the dictionary, some buckets have simple one-word names, but on the other hand many of them are called something like “prod3-company1-webstorage” or “dev-storage1-www.company.com”.
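The check described above can be sketched in Python. This is a minimal sketch, not AWSBucketDump’s code: it assumes you already have the HTTP status and body of an anonymous GET on the bucket root, and the names `bucket_url`, `classify` and the returned labels are hypothetical. It relies on S3 answering with a namespaced `ListBucketResult` document when listing is public, and with an `<Error>` document whose `<Code>` is `AccessDenied` (the bucket exists but listing is denied) or `NoSuchBucket` (no bucket with that name).

```python
import xml.etree.ElementTree as ET

# Namespace used by S3 ListBucketResult documents; S3 <Error> bodies have none.
S3_NS = "{http://s3.amazonaws.com/doc/2006-03-01/}"


def bucket_url(name):
    """Virtual-hosted-style address: the bucket name becomes a subdomain."""
    return f"https://{name}.s3.amazonaws.com"


def classify(status, body):
    """Label an anonymous GET of the bucket root (labels are hypothetical)."""
    try:
        root = ET.fromstring(body)
    except ET.ParseError:
        return "unknown"  # empty or non-XML body
    if status == 200 and root.tag == S3_NS + "ListBucketResult":
        return "public-listing"  # anyone can list the keys
    code = root.findtext("Code")  # e.g. <Error><Code>AccessDenied</Code>...
    if code == "AccessDenied":
        return "exists-denied"  # bucket exists, but listing is not public
    if code == "NoSuchBucket":
        return "no-such-bucket"  # name is free, nothing to look at
    return "unknown"
```

For example, `classify(403, "<Error><Code>AccessDenied</Code></Error>")` returns `"exists-denied"`, so that name would be thrown away, while only `"public-listing"` results are kept for review.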

Looking for buckets

There are a couple of tools on GitHub that can brute-force domains, or you can just write a simple one on your own. AWSBucketDump is exactly what I was looking for: it can grep for particular words and download interesting files. Armed with these weapons, I started looking for super-secret-military-multi-galaxy information to sell on the dark net (just kidding, I would find someone on the clearnet too). In the end, the script found about 1,000 domains (I tested with a simple dictionary, though). I didn’t use the -D option, which downloads files, because I needed only the names. First, I manually reviewed domains with “dev”, “stage” or “prod” in their names. It was boring, especially since my browser didn’t render the XML well. So I needed another semi-automatic post-exploitation tool to check for possible loot.
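A do-it-yourself version of the brute-forcing step might look like the following. It is a sketch under assumptions: the prefixes and suffixes are illustrative guesses modelled on names like “prod3-company1-webstorage”, `candidate_names`, `prioritise` and `probe` are hypothetical helpers, and `prioritise` only reproduces the manual “review dev/stage/prod first” ordering described above.

```python
import urllib.error
import urllib.request
from itertools import product


def candidate_names(company, prefixes=("dev", "stage", "prod"),
                    suffixes=("storage", "backup", "www")):
    """Build hypothetical bucket-name guesses around a company keyword."""
    company = company.lower()  # S3 bucket names are lowercase
    names = {company}
    for prefix, suffix in product(prefixes, suffixes):
        names.add(f"{prefix}-{company}-{suffix}")
        names.add(f"{company}-{prefix}-{suffix}")
    return sorted(names)


def prioritise(names, keywords=("dev", "stage", "prod")):
    """Move names containing an environment keyword to the front.

    sorted() is stable, so the original order is kept inside each group.
    """
    return sorted(names, key=lambda name: not any(k in name for k in keywords))


def probe(name, timeout=5.0):
    """Return the HTTP status of an anonymous GET of the bucket root.

    Returns None when no HTTP response arrives (DNS error, timeout, or a
    TLS name mismatch, which can happen for bucket names containing dots).
    """
    url = f"https://{name}.s3.amazonaws.com/"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:  # 403, 404, ... still carry a status
        return err.code
    except OSError:  # URLError, socket timeouts and TLS errors are OSErrors
        return None
```

Something like `[(n, probe(n)) for n in prioritise(candidate_names("company1"))]` would produce the raw list of names and status codes; the bodies would still need a check like the one above to separate public listings from “Access Denied”.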

Amazon Roulette

I had the addresses of roughly 1,000 S3 domains, and any one of them might contain something worthwhile (or not). This is the moment when the fun begins: I was looking for bak, xls(x), zip, pdf, doc(x) and csv files. The script got the name Amazon Roulette because you can draw a random file and check whether it’s accessible. I figured the best way was to look for extensions instead of file names (but you can retrieve those as well). If you have a file with a list of buckets in the form “*.s3.amazonaws.com”, you can pass it to the script. It connects to each bucket and prints all of the extensions it encounters for further analysis. To display files with a chosen extension, just type it, or go to the next target by pressing N. I didn’t care about jpg, mp3 or png files, so whenever I found that kind of bucket I skipped it instantly. Let’s assume the script found .csv files and printed them; you can then check a random file, and the script will reply with its full path and code 200 if it is accessible, otherwise code 403. You can also check all files with the chosen extension; this method is more precise but less fun.
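Amazon Roulette itself is not reproduced here; the following is a hypothetical sketch of its core idea, assuming the bucket allows listing and that one page of the listing (S3 returns at most 1,000 keys per request) is enough. All function names are illustrative. It counts extensions, draws a random key with the chosen extension and checks it with an anonymous HEAD request, where 200 means readable and 403 means denied.

```python
import posixpath
import random
import urllib.error
import urllib.parse
import urllib.request
import xml.etree.ElementTree as ET
from collections import Counter

S3_NS = "{http://s3.amazonaws.com/doc/2006-03-01/}"


def list_keys(listing_xml):
    """Object keys from one ListBucketResult page."""
    root = ET.fromstring(listing_xml)
    return [c.findtext(S3_NS + "Key") for c in root.iter(S3_NS + "Contents")]


def ext_of(key):
    """Lower-case extension without the dot; '' for folders or bare names."""
    return posixpath.splitext(key)[1].lower().lstrip(".")


def extensions(keys):
    """Count how often each extension appears, ignoring extension-less keys."""
    return Counter(e for e in map(ext_of, keys) if e)


def keys_with_ext(keys, ext):
    """All keys whose extension matches, case-insensitively."""
    wanted = ext.lower().lstrip(".")
    return [k for k in keys if ext_of(k) == wanted]


def object_url(bucket_domain, key):
    """Public URL of one object; quote() keeps '/' but escapes spaces etc."""
    return f"https://{bucket_domain}/{urllib.parse.quote(key)}"


def draw(keys, ext, rng=random):
    """Spin the roulette: a random key with the chosen extension, or None."""
    pool = keys_with_ext(keys, ext)
    return rng.choice(pool) if pool else None


def status_of(url, timeout=5.0):
    """HEAD the object: 200 means readable, 403 means access is denied."""
    req = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code
```

Checking every file of one extension instead of a random one is just a loop over `keys_with_ext(keys, "csv")` that calls `status_of(object_url(domain, key))` for each key.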

The screenshot below shows how to use it.

Loot

I was very skeptical about the whole Amazon S3 thing, but with little effort I discovered an open bucket with database backups from the AEC (Australian Electoral Commission), the Department of Finance in Australia, the NDIS (National Disability Insurance Scheme) in Australia, UGL Limited, AMP Limited and Rabobank. All of the table schemas look the same, so I assume that one company was responsible for maintenance and backups for all of these organizations.

The most interesting table is “Employee”, which includes emails, passwords, first names, last names, IDs, phone numbers and more. There were also tables called “Salaries”, “Invoices” and “Travels”, but they contained only fragments of data. The second important thing was the “Notes” table, where employees wrote their notes or where logs were generated. After a quick look, I stumbled upon credit card numbers (most of them were cancelled, but I didn’t check all of them) as well as user notes and notifications. Some of the records were duplicated, so the raw record counts are higher. Additionally, it’s not a fresh dump: all of the backups were made around March 2016.

Who is the owner?

Okay, I’m in possession of a large amount of confidential data, including data from the Australian government. It wasn’t a hack or anything sophisticated, just one small mistake that could lead to havoc in these organizations (or not?). I’m a white hat and don’t do this for money in any way, so I wanted to report it as fast as possible. After I downloaded the first backup, from AMP Limited, I tweeted at them and wrote an email, but no one gave a damn. I decided to check the rest of the files, and when I spotted NDIS and other Australian organizations, I reported it to ACORN (Australian Cybercrime Online Reporting Network) and ACSC (Australian Cyber Security Centre). After one day, someone from the Australian Department of Defence replied to me.

I was curious about this “leak”, so I asked about the bucket’s owner, the passwords (unsalted SHA1, by the way), the affected companies and so on. Most of my questions weren’t answered, but I expected that. He was not the right person to ask these kinds of questions, so I’m now in touch with Defence Media, and after a quick exchange of emails I’m still waiting for a reply. I will update this post as soon as I get more information.

Conclusion

Nothing to see here, just another open S3 bucket with confidential data. To the people who are looking for this kind of thing, I can say: keep digging. Every few weeks I read about another “leak”. To the people who manage S3 buckets: just use access lists.
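On the defensive side, a bucket’s access control list can be audited for grants to Amazon’s predefined public groups. This is a minimal sketch: `public_grants` is a hypothetical helper that takes the dictionary returned by boto3’s `get_bucket_acl` call and reports grants to `AllUsers` (anyone) or `AuthenticatedUsers` (any AWS account). It does not inspect bucket policies, which can also make a bucket public.

```python
# Predefined S3 group URIs that make an ACL grant effectively public.
PUBLIC_GROUPS = {
    "http://acs.amazonaws.com/groups/global/AllUsers": "AllUsers",
    "http://acs.amazonaws.com/groups/global/AuthenticatedUsers": "AuthenticatedUsers",
}


def public_grants(acl):
    """Return (group, permission) pairs for grants to public groups.

    `acl` is assumed to have the shape of a get_bucket_acl response:
    {"Owner": {...}, "Grants": [{"Grantee": {...}, "Permission": "READ"}, ...]}
    """
    found = []
    for grant in acl.get("Grants", []):
        grantee = grant.get("Grantee", {})
        uri = grantee.get("URI")
        if grantee.get("Type") == "Group" and uri in PUBLIC_GROUPS:
            # READ on a bucket is what lets anonymous users list its keys.
            found.append((PUBLIC_GROUPS[uri], grant.get("Permission", "")))
    return found
```

With boto3 installed and credentials configured, `public_grants(boto3.client("s3").get_bucket_acl(Bucket="my-bucket"))` would report them, where `my-bucket` is a placeholder; an empty list means the ACL itself grants nothing to those groups.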

You can check out the script here.

Originally published on 29th of August, 2017